The big tech companies have offered up an array of AI initiatives to drive their future growth and the value they add to their marketplaces. Here is a look at the key highlights from Microsoft's (NASDAQ: MSFT) recent developer conference, Microsoft Build, and from the strong outlook in Nvidia's (NASDAQ: NVDA) latest earnings release.

These events have underscored how these two companies are thinking about the future direction of AI for their businesses and the potential opportunities that lie ahead.

Microsoft’s Five Big Changes

At the Microsoft Build conference last week, CEO Satya Nadella said that every aspect of the tech stack is changing. He noted that close to 50 changes are underway at Microsoft and highlighted the following five big ones:

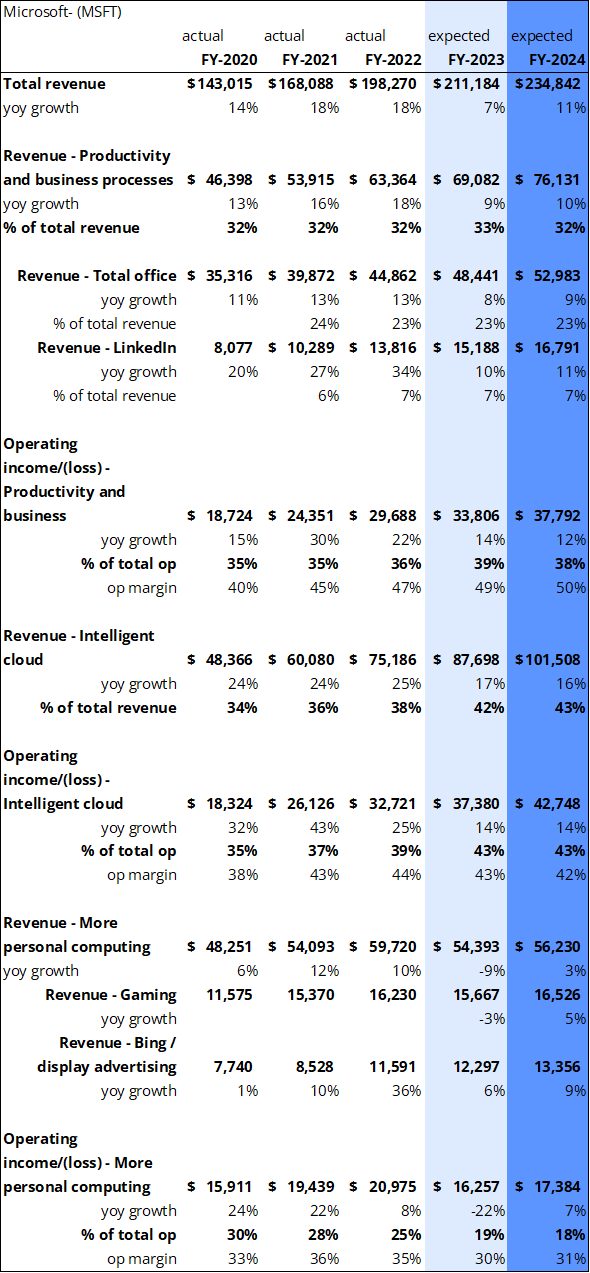

- Bing to ChatGPT: The company plans to use AI to deliver better search results. Analysts project Bing ad revenues to reach $13 billion in FY 2024. However, the global digital advertising market is enormous by comparison. While Microsoft remains behind Alphabet (NASDAQ: GOOGL) in search, could superior AI give it the edge to take a greater share of the digital advertising market?

- CoPilot to Windows: In his presentation, Panos Panay said it is a great time to be a developer on Windows and that he believes Windows is the best endpoint for AI. With 1.4 billion monthly active devices running Windows, CoPilot will enable developers to build applications that support new ways of working within Windows. Analysts estimate total Office revenues at $53 billion for FY 2024, which may be a conservative projection given the amount of innovation in this area. Is Microsoft aiming to become the workplace productivity platform by bringing these innovations to the PC?

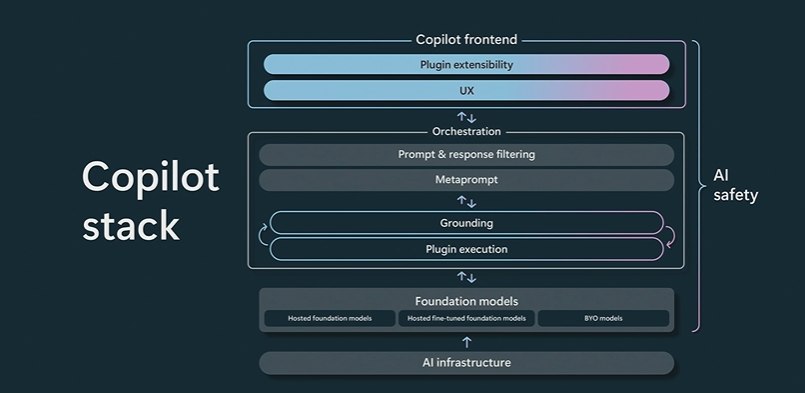

- CoPilot Stack: Microsoft CTO Kevin Scott highlighted an end-to-end platform that starts with Azure as the cloud for AI. The platform will let developers run powerful AI models on a Windows PC and build true hybrid AI applications that span from the edge to the cloud. Could the added automation and efficiency in this tech stack support higher margins?

Figure 1: Microsoft CoPilot Stack

Source: Microsoft Build (May 24, 2023)

- Azure AI Studio: This is a toolkit for building intelligent AI apps and deploying them safely. It will enable developers to build and train models and ultimately make them safe and compliant by embedding safety and compliance checks within the development lifecycle. There is healthy skepticism about the safety of AI and about Microsoft's role in advancing it, but by building safety into the toolchain, Microsoft aims to reduce deployment risk. Analysts expect Microsoft's Intelligent Cloud business to generate more than $100 billion in revenue in FY 2024, with a 42% operating profit margin. Will Azure AI Studio's ability to deliver high levels of data safety drive revenues?

- Microsoft Fabric: Because every AI application starts with data, Microsoft positions Fabric as the data analytics stack for AI. It will unify storage, compute, experience, governance, and the business model, and developers can use the same compute infrastructure for both SQL and Machine Learning. According to Nadella, Fabric will power the data layer for the next generation of AI applications. What role will it play in driving Microsoft's next leg of growth?

Figure 2: Analysts’ expectations for Microsoft

Source: Visible Alpha (May 30, 2023). All dollar figures are in millions.

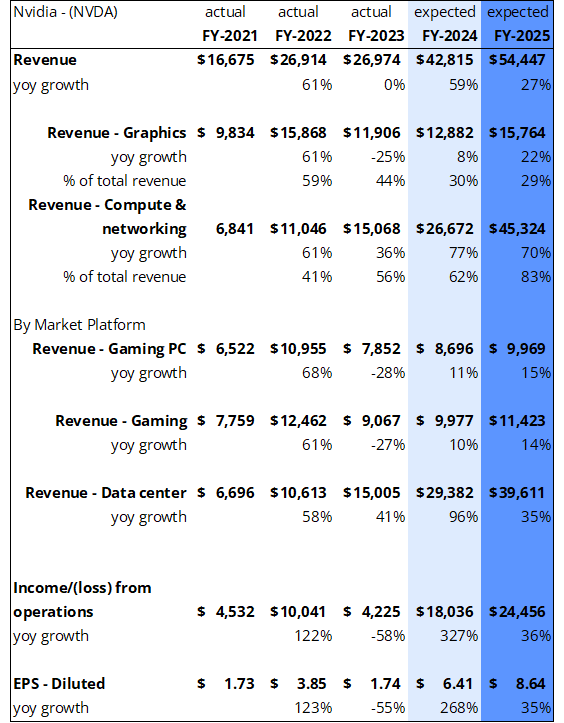

Nvidia’s Big AI Moment

Nvidia reported a strong beat in Q1 and guided Q2 revenues to $11 billion, $4 billion ahead of consensus, with gross margins expected to expand from 57% in FY 2023 to 68% in FY 2024. The company highlighted strong demand for its products in data centers, driven by customers racing to integrate AI. With visibility into Data Center demand extending into the second half of the year, the company has procured substantially more supply. In response, the stock rose as much as 26% on the day after the earnings release.

Figure 3: Analysts’ expectations for NVIDIA

Source: Visible Alpha (May 30, 2023). All dollar figures except EPS are in millions.

Accelerated computing is a full-stack problem: According to Nvidia CEO Jensen Huang, $1 trillion worth of data centers are populated by CPUs rather than accelerated computing. He believes CapEx dollars will shift toward generative AI infrastructure (Training and Inference) over the next 4-5 years. As generative AI moves toward accelerated computing, this shift is driving significant order flow to build out accelerated computing capacity in data centers. Before the earnings release, analysts expected data center revenue of $23 billion in FY 2025; they now expect nearly $40 billion.

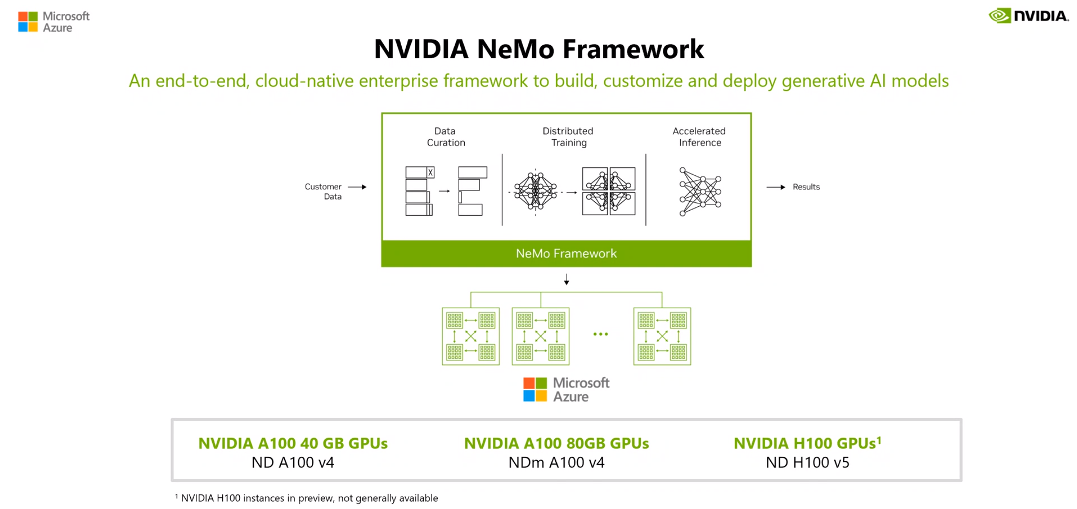

Figure 4: From data to results: How Microsoft and Nvidia will work together

Source: Microsoft Build (May 24, 2023)

As the gold standard in AI infrastructure, Nvidia will work with Microsoft to enable Machine Learning Training and Inference. The partnership will also bring Nvidia's Omniverse Cloud to Microsoft Azure, allowing new applications to be built and serviced together. In addition, Nvidia's CUDA applications can run on Windows, allowing existing software to be integrated. It is worth noting that Amazon Web Services (AWS) is investing in its own custom Machine Learning chips for Training and Inference.

The pace of potential growth in computing and networking is driving lofty analyst expectations for Nvidia. Before the earnings release, consensus estimates for FY 2025 were $37 billion in revenue and EPS of $4.87/share. They have since jumped to nearly $55 billion in revenue and $8.64/share. Given the demand for the company's products and the potentially large size of the market, how long will it take Nvidia to reach $100 billion in revenues?

The bottom line

AI developers have only just begun to tap the potential to create the next generation of must-have applications at scale. Over the next 4-5 years, there will likely be significant CapEx investment in data centers, development, and integration to prepare the world for what comes next. The magnitude of these shifts is likely to blur the lines between cloud, chip, software, and search providers. Both workplaces and consumers are likely to win in the end, with greater efficiency and productivity coupled with better-curated information. Like it or not, AI is here to stay.