The big tech companies have offered up an array of AI initiatives to drive their future growth and the value they add to their marketplaces. Nvidia (NASDAQ: NVDA) has positioned itself as a leader in supporting the growth and scalability of the Generative AI (GAI) space. Here is a look at the key highlights from CEO Jensen Huang’s recent keynote at the computer graphics conference SIGGRAPH 2023, and a preview of Nvidia’s upcoming earnings release.

The potentially enormous shift of data centers toward accelerated computing positions Nvidia to help drive the future direction of AI. Democratizing AI would open the way to integrating GAI into many different applications. According to Huang, “the iPhone moment for AI” is here.

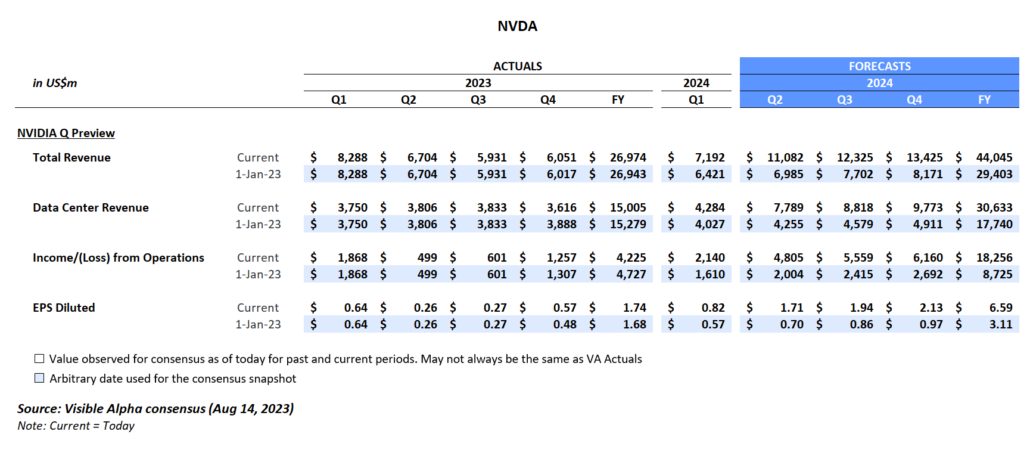

Expectations coming into Q2

Investor questions coming into Q2

- Will the strong demand for Hopper GPUs continue?

- With the recent upward revisions to the data center business segment, is there still room for outperformance in Q2?

- Given the new announcements at SIGGRAPH, could we see upside to other divisions, like gaming?

Takeaways from SIGGRAPH 2023

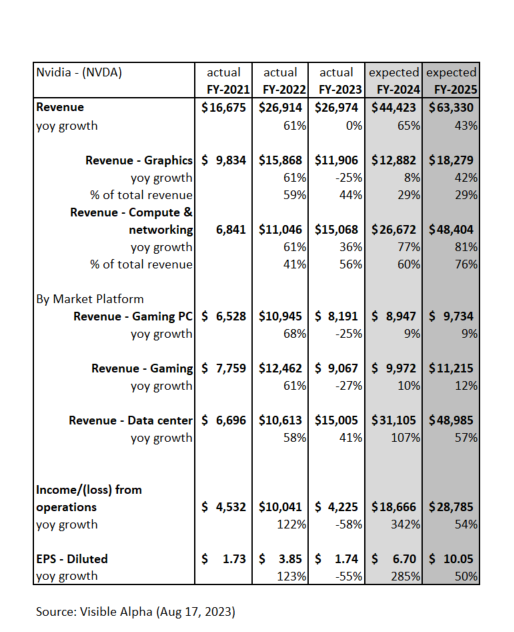

Nvidia’s revenue from Graphics is expected to grow 42% year-over-year to $18 billion by FY2025, driven by data center revenue growth. Delivering the keynote at SIGGRAPH 2023, a conference focused on computer graphics, CEO Jensen Huang walked through his vision and showcased several new product enhancements involving GH200, OpenUSD and Hugging Face.

In his presentation, Huang argued that it is possible to learn the representation of anything with structure: language, mathematics, vectors, audio, animation, 3D, DNA, proteins, chemicals. He explained that the revolutionary transformer model enables learning from large amounts of data by finding the patterns and relationships within it. This, in turn, makes it possible to learn the representation of anything with structure and to replicate it digitally (e.g., Digital Twins). The combination of a generative model and a learned language model can guide the auto-regressive diffusion models to generate anything with structure, and this guidance can happen in human natural language. In summary, accelerated computing has synced with deep learning, and the big bang of AI has begun.

All of this potential learning has significant implications not only for tech infrastructure and the stack, but also for its use within enterprises and, more broadly, by the masses. It has the potential to enable testing and experimentation in a digital beta world that resembles real 3D physical spaces. These capabilities may move us closer to a virtual assistant fluent in all languages, autonomous driving, and new drug development. In addition, there are potential entertainment applications for short video, games, and film.

In his keynote, Huang highlighted several new product announcements aimed at driving accelerated computing in the data center segment, and this line item is where growth from new product innovation will likely continue. However, given these enhancements, gaming is another segment we are keeping an eye on.

GH200: 20x less power and 12x less cost

The scale-out of the cloud has historically been based on off-the-shelf CPUs, but general-purpose computing is not the best way to do generative AI (GAI). GAI requires data centers wired for accelerated computing. According to Huang, accelerated computing is a giant step up in efficiency and throughput, because it takes 20x less power and costs 12x less. This efficiency can support expanded investment in training, running inference on, and scaling out any large language model. Huang further noted that future frontier models will start to become mainstream and scaled out.

Moving toward this vision, NVDA showcased a new processor for GAI with a boost of memory (HBM3) that will now be connected to Grace Hopper (GH200). Huang stated that the chips are in production, will sample at year end, and will be ready by the end of H1 next year. This processor is designed for the scale-out of the world’s data centers and may have significant implications for the pace at which GAI is adopted.

The data center segment is 75% of total revenues and represents the critical growth driver for the company. Visible Alpha consensus currently projects the data center segment to hit $30 billion in revenue this year, up 2x from the previous year. Looking ahead to 2024, analysts expect growth of over 50%, with sales from this segment reaching $46.5 billion. The most aggressive analysts are projecting the company to deliver $50 billion in revenues for the data center segment alone.
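For readers who want to check the arithmetic behind these projections, here is a minimal sketch in Python. It uses only the figures quoted above; the variable and function names are illustrative, and the prior-year figure is an assumption derived from the "up 2x" statement rather than a reported number.

```python
def growth_rate(new_value: float, old_value: float) -> float:
    """Return year-over-year growth as a fraction (0.5 means 50%)."""
    return new_value / old_value - 1.0


# Data center revenue figures quoted in the text, in billions of USD.
dc_this_year = 30.0    # consensus projection for this year
dc_next_year = 46.5    # consensus projection for 2024
dc_bull_case = 50.0    # most aggressive analyst projection

# Assumption: "up 2x from the previous year" implies roughly half of this year.
dc_prior_year_implied = dc_this_year / 2.0

consensus_growth = growth_rate(dc_next_year, dc_this_year)
bull_growth = growth_rate(dc_bull_case, dc_this_year)

print(f"Implied prior-year revenue: ${dc_prior_year_implied:.1f}B")
print(f"Consensus 2024 growth: {consensus_growth:.0%}")
print(f"Bull-case 2024 growth: {bull_growth:.0%}")
```

On these inputs, the consensus figure works out to growth of about 55%, consistent with "over 50%", while the $50 billion bull case would imply growth of roughly 67%.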

How may GH200/HBM3 drive new revenue growth beyond current expectations to the data centers segment?

Source: SIGGRAPH 2023 (August 8, 2023)

What about the other revenue segments?

Gaming is projected to generate $10 billion in revenues this year, but to grow only 13% next year. Expectations remain lackluster compared to data center revenue growth, but that may ultimately leave more room for surprise over the longer term. It will be interesting to see whether Huang’s announcements about OpenUSD support better-than-expected growth for the gaming division going forward. There seem to be significant opportunities to apply OpenUSD and GAI to gaming and the other divisions. The company’s professional visualization, automotive, and OEM segments remain small, but given the potential changes happening with GAI, there may be significant room for these businesses to grow.

Source: SIGGRAPH 2023 (August 8, 2023)

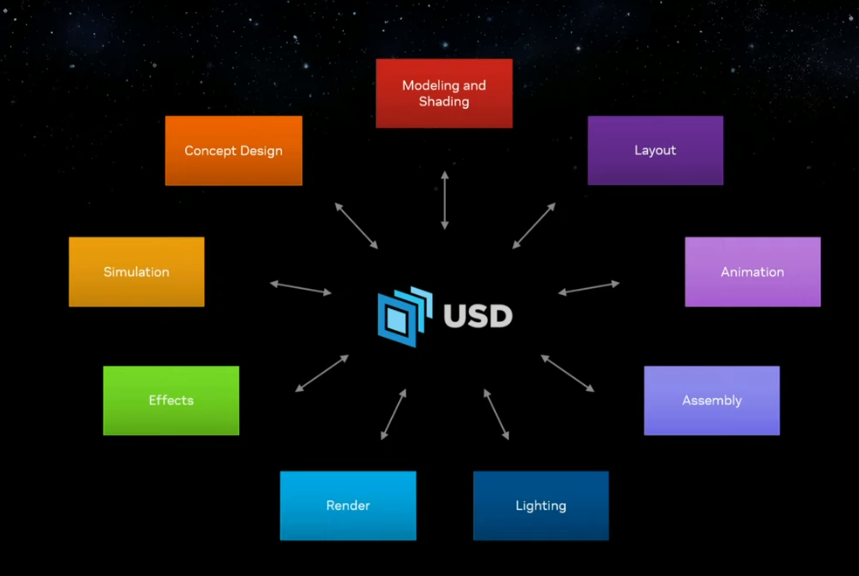

At SIGGRAPH, Huang highlighted the new Omniverse release featuring GAI and OpenUSD, designed to unify 3D. OpenUSD brings the world together into one 3D interchange. According to Huang, it will do for 3D what HTML did for the web. Taking this a step further, Omniverse and GAI need each other, because together they enable the creation of a physical world simulation. This simulation can serve as a digital environment in which to train a model about consequences and, potentially, common sense. AI can learn in a virtual world and help to create more realistic digital worlds. There are significant potential opportunities to apply this to testing, gaming, and enterprise business tools.

Hugging Face

To democratize AI and streamline model training for individual enterprises, Nvidia has partnered with Hugging Face, the world’s largest AI community, to offer a new service that enables training directly on Nvidia DGX Cloud. Training models directly can support both more scalable and more curated integration into a new or existing legacy application, which is ultimately what can bring GAI to life for large organizations or individuals.

DGX footprints are going to be set up in the major cloud providers: Azure, Oracle, and Google. In addition to performance improvements, servers built with L40S Ada Lovelace GPUs are going to be used for mainstream models that can be downloaded from Hugging Face. Alternatively, NVDA can create models for enterprise applications and work with a company via NeMo to scale enterprise-level LLMs. These enhancements suggest that there continues to be substantial opportunity in this new AI era.

Final Thoughts

All eyes are on the quarter for NVDA. In particular, investors are keen to see how the company will guide and what new details will come out of its earnings call about the future trajectory of its data center business. In addition, it will be interesting to see if the company gives new details about the gaming segment and its other smaller business lines, and about the potential upside from OpenUSD and the Hugging Face partnership. Overall, investors want to see whether GAI momentum has the legs to continue or whether NVDA’s stock may take a breather in the event that results and the outlook are merely in line with expectations.