Here is a look at the key highlights from Microsoft’s (NASDAQ: MSFT) recent developer conference, Microsoft Build 2024. The conference underscored how Microsoft is thinking about the future direction of AI for its business and the opportunities that may lie ahead.

Key highlights from Microsoft Build 2024

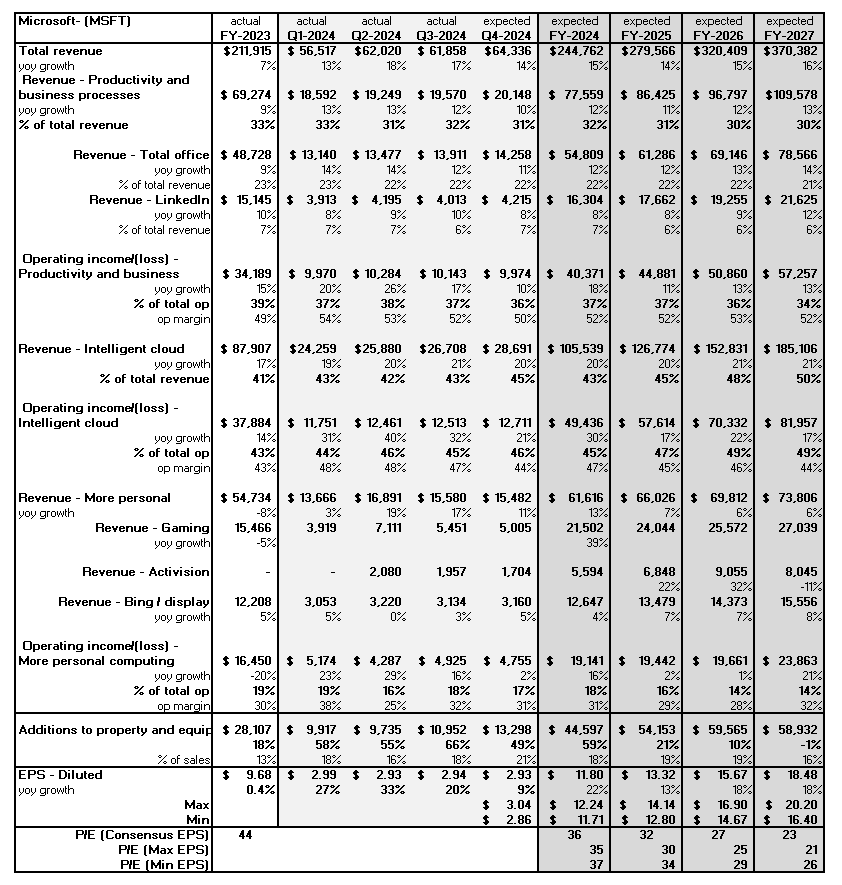

At the Microsoft Build conference last week, CEO Satya Nadella reiterated that every aspect of the tech stack is changing and then walked through the key updates. These innovations appear to position Microsoft Azure well for future growth. Based on Visible Alpha consensus, the Intelligent Cloud segment is projected to grow from $105.5 billion this year to $185.1 billion over the next three years. That growth is expected to be driven by Azure, whose revenue is projected to rise from nearly $75 billion this fiscal year to over $150 billion by the end of fiscal year 2027 while generating a 45% operating margin.
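To put these consensus figures in perspective, the implied compound annual growth rate (CAGR) can be backed out from the start and end values. The sketch below is a rough back-of-the-envelope check, not a Visible Alpha calculation: it assumes each projection spans exactly three fiscal years, treats "nearly $75 billion" and "over $150 billion" as exactly $75 billion and $150 billion, and applies the 45% operating margin to the FY2027 Azure figure. The `cagr` helper is a hypothetical name introduced for illustration.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing from start to end over `years` years."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


# Visible Alpha consensus figures quoted above, in billions of USD.
# Assumption: the Intelligent Cloud projection spans exactly three years.
intelligent_cloud = cagr(105.5, 185.1, 3)

# Assumption: "nearly $75B" (this fiscal year) and "over $150B" (FY2027)
# are rounded to 75 and 150, three fiscal years apart.
azure = cagr(75.0, 150.0, 3)

# Assumption: the 45% operating margin applies to ~$150B of FY2027 Azure revenue.
azure_fy27_operating_income = 150.0 * 0.45

print(f"Intelligent Cloud implied CAGR: {intelligent_cloud:.1%}")
print(f"Azure implied CAGR: {azure:.1%}")
print(f"Implied FY2027 Azure operating income: ${azure_fy27_operating_income:.1f}B")
```

Run as-is, this prints an implied CAGR of about 20.6% for Intelligent Cloud and about 26.0% for Azure (a doubling over three years), plus roughly $67.5 billion of implied FY2027 Azure operating income. Because the inputs are rounded consensus numbers, these are approximations.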

Here are some of the key takeaways:

1) Smarter models are coming: Microsoft’s CTO and EVP of AI, Kevin Scott, and OpenAI CEO Sam Altman took the stage to discuss what’s next for Generative AI (GAI). GPT-4o, OpenAI’s latest multimodal model trained on Azure, works across text, audio, image, and video. According to Scott, engaging with ChatGPT is going to get cheaper and faster. In a demo of GPT-4o, users could interrupt the model mid-response and get it to pivot, an important step toward more natural interactions with the technology.

According to Altman, a GAI layer is going to be built into every product and service, with the goal of making it easy for users to engage with GAI. Based on his comments, this should ultimately make the models more robust, safer, and more useful. It should also make the models smarter, which in turn should lower prices and increase speed.

Figure 1: Microsoft’s CTO and EVP of AI Kevin Scott and OpenAI CEO Sam Altman

2) Laptops for the AI era: In a special session on Monday, May 20, 2024, ahead of the Microsoft Build presentations, Microsoft introduced a new category of Windows PCs designed for AI: Copilot+ PCs. Pre-orders started last week, and the new AI laptops will be available from June 18, 2024, starting at $999. According to Microsoft, Copilot+ PCs are notably faster than Macs and are “the most intelligent Windows PCs ever built.”

Based on Microsoft’s demo, Copilot+ PCs will let users quickly find anything they have previously worked on with Recall, generate and refine AI images directly with Cocreator, and translate audio from 40+ languages into English with Live Captions. These features bring GAI into core, everyday use cases.

Figure 2: Copilot+ PC = The AI Laptop

3) Copilot stack enhancements: With every layer of the tech stack changing, Nadella used his keynote to give an overview of the updates at each layer. The Azure improvements stood out and are expected to make training and inference cheaper and faster. According to Nadella, Azure’s end-to-end systems optimization, from the data center to the network, is designed to use less power and reduce the cost of AI. At the silicon layer, Microsoft can dynamically map workloads to the best-suited accelerated AI hardware to improve performance.

Microsoft’s end-to-end approach is helping Azure scale. Over the past six months, Microsoft has added 30x the supercomputing power to Azure. According to Nadella, Microsoft’s partnership with Nvidia spans the entire Copilot stack, and Azure will use both Hopper (H200) and Blackwell (B100 and GB200) GPUs to train and optimize both large language models (LLMs) and small language models.

Given these enhancements, how much can greater scale, automation, and efficiency across Microsoft’s tech stack support further revenue growth and margin expansion?

Figure 3: Microsoft Copilot

Figure 4: Microsoft consensus estimates

The bottom line

AI developers have only just begun to tap the potential to build the next generation of must-have applications at scale. Over the next four to five years, there will likely be significant CapEx investment in data centers, development, and integration to ready the world for what comes next. The magnitude of these shifts is likely to blur the lines between cloud, chip, software, and search providers. Both workplaces and consumers are likely to win in the end, with greater efficiency and productivity coupled with better-curated workflows. Like it or not, AI is off and running.